In the spring I started a UX Design course on Coursera (and will complete the certificate this month!).

And I’ve discovered that the way AI is used within the training is captivating!

Going into this course, I had extensive experience as a Technical Writer and wanted to broaden my knowledge to cover all aspects of working in user experience, from research to surveys to documenting the process.

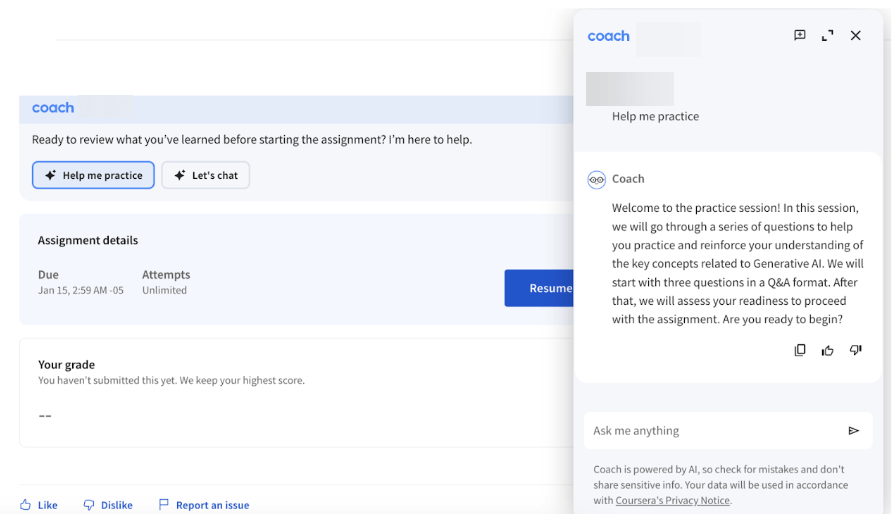

It has been a minute since I was part of academia – online or in person – so I needed to relearn some habits. One of them was studying, and I was intrigued to see that studying for a test on the platform included help from an AI coach. The coach would ask detailed questions and expect detailed responses.

As an example:

AI Coach: How would you connect the features and benefits of a product with the needs of its users?

Me: Connecting the features and benefits of a product with the needs of its users involves understanding the specific needs and pain points of the target audience and then demonstrating how the product’s features directly address those needs. It’s about showing the value that the product brings to the users.

AI Coach: Interesting response! While creating a value proposition is indeed important, let’s dive a bit deeper. Product features are the specific characteristics or functionalities of a product. They describe what the product can do or what it includes. On the other hand, product benefits are the positive outcomes or advantages that users gain from using the product. They answer the question, “What’s in it for me?” or “How does this product improve my life or solve my problem?”

Me: I’m ready for my test!

The AI Coach helps in so many ways, both to expand on what’s in the lesson and to prepare for tests. It can:

- Provide an in-depth review of the module

- Encourage critical thinking

- Ask for detailed responses

- Provide harder test questions (than the actual test)

In what unexpected ways has AI helped you think more critically and opened up more ways to learn?